Abstract

Clinicians dedicate significant time to clinical documentation, incurring an opportunity cost. Artificial intelligence (AI) tools promise to improve documentation quality and efficiency. This systematic review surveys peer-reviewed AI tools to understand how AI may reduce this opportunity cost. PubMed, Embase, Scopus, and Web of Science were queried for original, English-language research studies published in or before July 2024 that report the development, application, and validation of a new AI tool for improving clinical documentation. Of 673 candidate studies, 129 were included. AI tools improve documentation by structuring data, annotating notes, evaluating quality, identifying trends, and detecting errors. Other AI-enabled tools assist clinicians in real time during office visits, but moderate accuracy precludes broad implementation. Although a highly accurate end-to-end AI documentation assistant has not been reported in the peer-reviewed literature, existing techniques such as data structuring offer targeted improvements to clinical documentation workflows.

Keywords: artificial intelligence; documentation; automation; clinical guidelines; electronic health records; informatics

Introduction

Robust clinical documentation is critical for efficient, high-quality care and for diagnosis-related group (DRG) coding and reimbursement, and it is required for compliance with the Joint Commission on Accreditation of Healthcare Organizations (JCAHO).1–3 Physicians spend 34 to 55 percent of their workday creating and reviewing clinical documentation in electronic health records (EHRs), translating to an opportunity cost of $90 to $140 billion annually in the United States, that is, the value of documentation time that could otherwise be spent on patient care.1,3,4 This clerical burden reduces time spent with patients, decreasing quality of care and contributing to clinician dissatisfaction and burnout.3,5 Clinical documentation improvement (CDI) initiatives have sought to reduce this burden and improve documentation quality.6

Background

Despite the need for increased documentation efficiency and quality, CDI initiatives are not always successful.7 Artificial intelligence (AI) tools have been proposed as a means of improving the efficiency and quality of clinical documentation,8,9 and could reduce opportunity cost while producing JCAHO-compliant documentation and assisting coding and billing efforts.10 This study seeks to summarize the available literature and describe how AI tools could be implemented more broadly to improve documentation efficiency, reduce documentation burden, increase reimbursement, and improve quality of care.

Methods

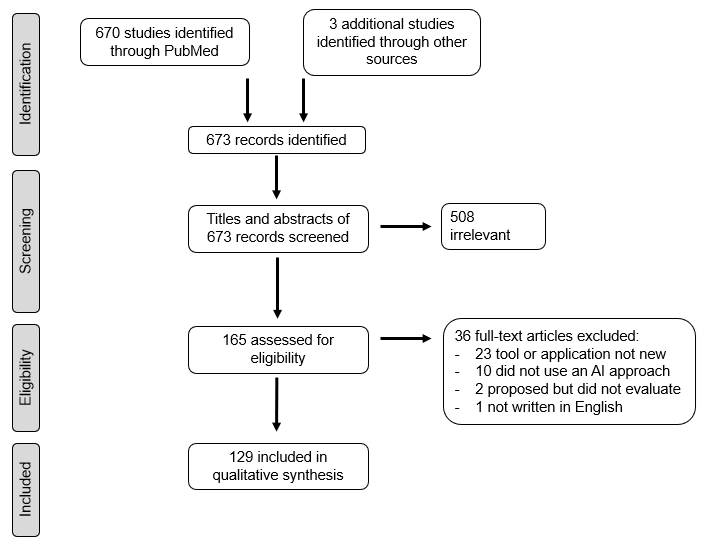

Best practices established in the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were used to search, organize, and review papers (Figure 1). As no patient information was involved, no Institutional Review Board review was undertaken. The review was registered on the Open Science Framework registry. The PubMed, Embase, Scopus, and Web of Science databases were queried using the search strategies shown in Figure 1. Three independent graders screened articles against the following inclusion criteria: full-text research article, written in English, describing the novel development and application of AI tools to improve clinical documentation, and published between the earliest searchable year of each database and July 2024. Exclusion criteria included studies that did not involve a new method or application of a tool, studies that did not use an AI technique, and studies that proposed methodology but did not validate an applicable tool. Disagreements between graders were resolved by discussion. Covidence software (Veritas Health Innovation, Melbourne, Australia) was used to organize the review, and search results were last accessed on August 1, 2024. Data extracted from studies included clinical data types, AI methods, tasks, reported effectiveness, and publication dates. No funding was received for this review.

Results

Querying PubMed, Embase, Scopus, and Web of Science yielded 670 studies, and three additional studies were found in the references of related literature. After articles were screened for relevance and eligibility according to the inclusion and exclusion criteria, 129 studies were included in the narrative systematic review. A complete overview of the included studies is provided in Table 1. Of the excluded studies, 23 reported a non-novel tool or application,11–33 10 did not use an AI approach,34–43 and two proposed but did not evaluate new methodology.44,45

The earliest included study was published in 2005, and the number of studies increased from 2005 to 2022 (Figure 2). Notably, 25 studies (an average of 2.08 per month) were published in 2022, but only 18 studies (an average of 0.95 per month) were published from January 2023 to July 2024 (Figure 2). This decrease of approximately 55 percent in peer-reviewed studies per month coincided with the release of ChatGPT on November 30, 2022. Current AI tools improved clinical documentation by aiding clinicians or CDI initiatives in six domains, described below: tools aided clinicians by structuring data, annotating notes, detecting errors, or serving as AI documentation assistants, and aided CDI initiatives by evaluating documentation quality or identifying trends. Seventy-seven percent of studies aided clinicians, while 23 percent aided CDI initiatives (Figure 3). Most studies concerned data structuring algorithms (68 percent), followed by evaluating quality (18 percent), identifying trends (5 percent), AI-enabled assistants (5 percent), detecting errors (3 percent), and annotating notes (1 percent) (Figure 3). Although the prevalence of studies varies by domain, each domain has the potential to improve clinical documentation, as discussed below.
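
The monthly rates quoted above follow from dividing each study count by the number of months in its window. The short Python check below uses only the counts stated in this section. It reproduces the 2.08 and 0.95 figures and shows that the January 2023 to July 2024 rate is about 45 percent of the 2022 rate, which corresponds to a relative decrease of about 55 percent.

```python
# Counts and date ranges as stated in the review text.
studies_2022, months_2022 = 25, 12    # January-December 2022
studies_post, months_post = 18, 19    # January 2023-July 2024 (12 + 7 months)

rate_2022 = studies_2022 / months_2022   # studies per month in 2022
rate_post = studies_post / months_post   # studies per month afterwards

ratio = rate_post / rate_2022            # later rate as a fraction of the 2022 rate
pct_decrease = (1 - ratio) * 100         # relative decrease in the monthly rate

print(f"2022: {rate_2022:.2f} per month; Jan 2023-Jul 2024: {rate_post:.2f} per month")
print(f"later rate is {ratio:.0%} of the 2022 rate, a {pct_decrease:.0f}% decrease")
```

Running it prints rates of 2.08 and 0.95 per month, a ratio of 45%, and a decrease of 55%.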

Structuring Free-Text Data

Free-text notes, once the standard in documentation, are flexible and allow clinicians to dictate or type. In contrast, structured data consist of pre-populated fields, offering a less flexible but organized, searchable, and easily analyzed note format.46,47 AI tools have the potential to bridge this gap, saving clinicians time by organizing text into paragraphs, presenting only the most relevant options in picklists, and automatically placing important information in structured data fields.

Clinical notes typically contain headings and an inherent organization that an AI system can exploit. Rule-based approaches have been effective for various data structuring tasks, including classifying race with F-scores of 0.911 to 0.984 (higher F-scores indicate better performance),48,49 identifying confidential content in adolescent clinical notes,50 extracting social needs,51 and identifying stroke.52 Wang et al. developed an AI-guided dynamic picklist that displays the most probable allergic reactions once an allergen is entered; 82.2 percent of the top 15 picklist choices were relevant to a given note.53 Beyond rule-based methods, Gao et al. developed an adaptive unsupervised algorithm that automatically summarizes patients’ main problems from daily progress notes, with significant performance gains over alternative rule-based systems.54 To further enable data structuring, Ozonoff et al. and Allen et al. built natural language processing (NLP) models to extract patient safety events and social factors from EHRs, with accuracy greater than 0.9 and positive predictive values of 0.95 to 0.97, respectively.55,56 Yoshida et al. improved the accuracy of automated gout flare ascertainment using least absolute shrinkage and selection operator (LASSO) methods in addition to a Medicare claims-only model.57
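
As an illustration of how rule-based extraction and its evaluation fit together, the sketch below flags housing instability (one kind of social need) with hand-written patterns and a crude negation rule, then scores the output with precision, recall, and the F-score (the harmonic mean of precision and recall). The patterns, the 30-character negation window, and the toy notes are hypothetical and are not taken from any reviewed study.

```python
import re

# Hypothetical trigger phrases for one social need (housing instability).
HOUSING = re.compile(
    r"\b(homeless(ness)?|unstably housed|living in (a |his |her )?car|shelter)\b", re.I
)
# Crude negation rule: a cue word within 30 characters (no period) before the trigger.
NEGATION = re.compile(r"\b(denies|no|not)\b[^.]{0,30}$", re.I)


def has_housing_need(note: str) -> bool:
    """Return True if any non-negated housing-instability trigger appears in the note."""
    for match in HOUSING.finditer(note):
        if not NEGATION.search(note[: match.start()]):
            return True
    return False


def precision_recall_f1(predicted, gold):
    """Standard precision, recall, and F-score (F1) for binary labels."""
    pairs = list(zip(predicted, gold))
    tp = sum(1 for p, g in pairs if p and g)
    fp = sum(1 for p, g in pairs if p and not g)
    fn = sum(1 for p, g in pairs if g and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy notes with gold labels (illustrative only, not data from any reviewed study).
notes = [
    ("Patient is currently homeless and staying at a shelter.", True),
    ("Denies homelessness; lives with daughter.", False),
    ("Lives in stable housing with spouse.", False),
    ("Has been living in his car since March.", True),
    ("Volunteers at a homeless shelter on weekends.", False),  # false positive for the rules
    ("Couch surfing with friends after eviction.", True),      # missed by the rules
]
predicted = [has_housing_need(text) for text, _ in notes]
gold = [label for _, label in notes]
print(precision_recall_f1(predicted, gold))  # precision, recall, and F1 are each about 0.667
```

The two deliberate failures (a volunteer at a shelter, and couch surfing with no listed trigger phrase) show why rule sets usually need iterative refinement against annotated notes.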

In recent years, neural networks have been increasingly used for AI CDI. Moen et al. used a neural network to organize free-text sentences from nursing notes into paragraphs and assign subject headings with 69 percent coherence.58 Deep learning and generative models have been applied to extract social determinants of health,59 classify acute renal failure with an area under the receiver operating characteristic curve (AUC) of 0.84 (an AUC of 1.0 indicates perfect classification and 0.5 indicates chance-level performance),60 extract headache frequency,61 and identify autism spectrum disorders.62 Hua et al. identified psychosis episodes in psychiatric admission notes and showed that decision-tree and deep-learning methods outperformed rule-based classification.63
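
The AUC values quoted in this review can be read as the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case. Below is a minimal sketch of that rank-based definition. It compares every positive with every negative, so it suits only small examples, and the scores and labels are made up.

```python
def roc_auc(scores, labels):
    """Fraction of (positive, negative) pairs ranked correctly; ties count as half."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0      # positive correctly ranked above negative
            elif p == n:
                wins += 0.5      # tie: no information either way
    return wins / (len(pos) * len(neg))


print(roc_auc([0.9, 0.4, 0.6, 0.2], [1, 1, 0, 0]))  # 0.75: one of four pairs is misordered
```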

Algorithms were also developed to structure data for communication between departments and even between institutions. For example, Visser et al.’s random forest model detected actionable findings and nonroutine communication in radiology reports with an AUC of 0.876.64 Kiser et al. developed models to group EHR transfers and improve transfers between institutions, with difference-in-differences in AUC ranging from 0.005 to 0.248.65 Other studies structured a wide variety of data, often with high accuracy; in total, 88 studies in the domain of structuring free-text data were identified (Table 1). While promising, these methods were not compared against the accuracy and efficiency of unassisted physicians, which limits their external applicability.

Increasing Patient Understanding

As patients increasingly access clinical documentation through online portals, medical terminology remains difficult for them to understand.66,67 Chen et al. developed a system using rule-based and hybrid methods to link medical terms in clinical notes to lay definitions, which improved note comprehension among laypeople.21,68 Toward the same goal, Moramarco et al. used an ontology-based algorithm to convert sentences in medical language into simplified sentences in lay terms.69 In the future, these two methods of increasing patient understanding could increase patient adherence to treatment and decrease costs associated with nonadherence (Figure 1).21,68,70
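
Below is a minimal sketch of dictionary-based term linking. It assumes a small hand-built lexicon of medical terms and lay definitions; these are hypothetical entries, not the resources used by Chen et al. The sketch only appends definitions and does not simplify sentence structure in the way the approach of Moramarco et al. does.

```python
import re

# Hypothetical mini-lexicon mapping (lowercase) medical terms to lay definitions.
LAY_LEXICON = {
    "myocardial infarction": "heart attack",
    "hypertension": "high blood pressure",
    "dyspnea": "shortness of breath",
}


def annotate(note: str, lexicon: dict = LAY_LEXICON) -> str:
    """Append a bracketed lay definition after each known medical term."""
    # Longer terms first so multiword terms are preferred over shorter overlapping terms.
    terms = sorted(lexicon, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, terms)) + r")\b", re.IGNORECASE)
    return pattern.sub(lambda m: f"{m.group(0)} [{lexicon[m.group(0).lower()]}]", note)


print(annotate("Dyspnea on exertion; history of myocardial infarction."))
# Dyspnea [shortness of breath] on exertion; history of myocardial infarction [heart attack].
```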

Speech Recognition and Error Detection

AI-based speech recognition (AI-SR) programs could enable a “digital scribe” that decreases documentation burden. Programs evaluated in the peer-reviewed literature are limited by increased error rates, while newer commercial programs have not been well studied.71 Five studies reported a 19.0 to 92.0 percent decrease in mean documentation time with AI-SR, four reported increases of 13.4 to 50.0 percent, and three reported no significant difference.72–83 The ability of NLP tools to distinguish grammatically correct from incorrect language shows promise for reducing SR error rates by detecting errors in SR-generated notes.84–86 Lybarger et al. detected 67.0 percent of sentence-level edits and 45.0 percent of word-level edits in clinical notes generated with SR,84 while Voll et al. detected errors with 83.0 percent recall and 26.0 percent precision.86 Lee et al. developed a model that detected missing vitrectomy codes with an AUC of 0.87, identifying 66.5 percent of missed codes.87 Although high error rates have yet to be rectified, clinicians already appear interested in adopting AI-SR: a survey of 1,731 clinicians at two academic medical centers reported high satisfaction with AI-SR and a belief that SR improves efficiency.88 In total, four studies addressing the domain of speech recognition and error detection were identified (Table 1).
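
One simple way to detect likely recognition errors is to flag word sequences that never occur in trusted reference text. The sketch below is a toy bigram check, not the method of Lybarger et al. or Voll et al. The reference sentences are hypothetical, and a realistic system would need a large corpus and smoothed probabilities.

```python
from collections import Counter


def train_bigrams(reference_sentences):
    """Count word bigrams (with a sentence-start token) in trusted reference text."""
    counts = Counter()
    for sentence in reference_sentences:
        tokens = ["<s>"] + sentence.lower().split()
        counts.update(zip(tokens, tokens[1:]))
    return counts


def flag_suspect_words(sentence, bigram_counts):
    """Return (word index, word) for each word whose bigram with the previous word was never seen."""
    tokens = ["<s>"] + sentence.lower().split()
    return [
        (i, tokens[i + 1])
        for i, pair in enumerate(zip(tokens, tokens[1:]))
        if bigram_counts[pair] == 0  # Counter returns 0 for unseen pairs
    ]


reference = [
    "patient denies chest pain",
    "patient reports chest pain",
    "patient denies shortness of breath",
]
model = train_bigrams(reference)
print(flag_suspect_words("patient denies chess pain", model))   # [(2, 'chess'), (3, 'pain')]
print(flag_suspect_words("patient reports chest pain", model))  # []
```

Here the misrecognized "chess" is flagged, but so is the correct word "pain", because the error breaks both adjacent bigrams. Detectors of this kind readily achieve high recall at the expense of precision, the same trade-off visible in the recall and precision figures reported above.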

Integrative Documentation Assistance

AI has also been proposed as a real-time assistant that improves documentation during patient encounters by recording encounter audio, supporting physician decisions, calculating risk scores, and suggesting clinical encounter codes.8,89 Wang et al. developed an AI-enabled tablet-based program that transcribes conversations from encounter audio, generates text from handwritten notes, automatically suggests clinical phenotypes and diagnoses, and incorporates desired images, photographs, and drawings into clinical notes; the SR component had a 53.0 percent word error rate, and the phenotype recognizer achieved 83.0 percent precision and 51.0 percent recall.90 Mairittha et al. developed a dialogue-based system that increased average documentation speed by 15 percent with 96 percent accuracy.91 Kaufman et al. developed an AI tool that transcribes speech and converts the resulting text into a structured format, decreasing documentation time at the cost of a slight decrease in documentation quality.92 Xia et al. developed a speech recognition-based EHR with an accuracy of 0.97 that reduced documentation time by 56 percent.93 Owens et al. reported that use of an ambient voice documentation assistant was associated with significantly decreased documentation time and provider disengagement, but not with decreased total provider burnout.94 Hartman et al. developed an integrated documentation assistant that automatically generates summary hospital course texts for neurology patients, 62 percent of which were judged by board-certified physicians to meet the standard of care.95 The efficiency gains reported in these studies are promising, but clinical implementation may be precluded by the time spent correcting errors that lower documentation quality (Table 1).
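
Word error rate (WER), the metric reported for the SR component above, is the minimum number of word substitutions, deletions, and insertions needed to turn the recognizer's output into a reference transcript, divided by the number of reference words. Below is a standard dynamic-programming (edit distance) sketch; the example sentences are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum edits to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


print(word_error_rate("patient reports chest pain", "patient report chess pain"))  # 0.5
print(word_error_rate("patient reports chest pain", "patient reports pain"))       # 0.25
```

Under this definition, a WER of 53.0 percent means the number of required edits is roughly half the reference word count. Because insertions are counted, WER can exceed 100 percent.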

Assessing Clinical Note Quality

CDI initiatives often include manual chart review to assess clinical notes for timeliness, completeness, precision, and clarity.6,96 AI tools can assist toward that end by recognizing the presence or absence of knowledge domains, social determinants of health, performance status, and topic discussion, prompting clinicians to add documentation relating to a domain when needed (Table 1).97–101 In addition to these domains, unclear notes and redundant information are major problems in clinical documentation.102 Deng et al. used an NLP system to evaluate the quality (classified as high, intermediate, or low) of contrast allergy records based on ambiguous or specific concepts, finding that 69.1 percent were of low quality.103 Zhang et al. developed a method using conditional random fields and long short-term memory (LSTM) networks to classify information in clinical notes as new or redundant at the sentence level, achieving 83.0 percent recall and 74.0 percent precision.33,104 Similarly, Gabriel et al. developed an algorithm to classify pairs of notes as similar, exact copies, or common output (automatically generated notes such as electrocardiogram or laboratory results).105 Seinen et al. both detected and improved note quality, using semi-supervised models to refine unspecific condition codes in EHR notes.106 Zuo et al. also improved quality by standardizing clinical note format with a transformer-based approach.107 Regarding specific content standards, Barcelona et al. used NLP to identify stigmatizing language in labor and birth clinical notes.108
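
Sentence-level redundancy detection can be illustrated with a much simpler technique than the conditional random field and LSTM approach of Zhang et al. The idea is to compare each sentence in a new note with the sentences of prior notes using token-overlap (Jaccard) similarity, and to label it redundant above a threshold. The 0.7 threshold and the example notes below are hypothetical.

```python
import re


def tokens(text: str) -> set:
    """Lowercased alphanumeric tokens of a sentence."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set, b: set) -> float:
    """Shared tokens divided by all distinct tokens."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def label_sentences(current_sentences, prior_notes, threshold=0.7):
    """Label each current sentence 'redundant' if it closely matches any prior sentence, else 'new'."""
    prior = [
        tokens(s)
        for note in prior_notes
        for s in re.split(r"(?<=[.!?])\s+", note)  # naive sentence split on end punctuation
        if s.strip()
    ]
    labels = []
    for sentence in current_sentences:
        best = max((jaccard(tokens(sentence), p) for p in prior), default=0.0)
        labels.append("redundant" if best >= threshold else "new")
    return labels


prior_notes = ["Patient has hypertension on lisinopril. Denies chest pain."]
today = ["Patient has hypertension on lisinopril.", "New onset chest pain this morning."]
print(label_sentences(today, prior_notes))  # ['redundant', 'new']
```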

The concepts of content domains, note clarity, and redundancy do not account for changes in these domains over time. This temporal metadata can be harnessed to improve clinical documentation, as demonstrated by Bozkurt et al., who used an NLP pipeline to evaluate documented digital rectal examinations (DREs) by insurance provider and classify them as current, historical, hypothetical, deferred, or refused.15,109 Other studies identified time-sensitive documentation concerns, including goals-of-care discussions at the end of life, patient priorities language, and adherence to care pathways in heart failure.110–112 Marshall et al. developed a rule-based NLP model that detected diagnostic uncertainty in EHR notes with a sensitivity of 0.894 and a specificity of 0.967.113 In total, 20 studies addressing the domain of clinical note quality were identified (Table 1). Such algorithms could be used to prompt clinicians when protocols and procedures are not correctly documented within a given time after diagnosis.
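
Temporal classification of this kind can be approached with cue phrases checked in priority order. The sketch below assigns one of the five DRE statuses listed above to a sentence. The cue lists and their ordering are hypothetical and far smaller than a production pipeline, such as that of Bozkurt et al., would require.

```python
import re

# Hypothetical cue patterns, checked in priority order; the first match wins.
STATUS_RULES = [
    ("refused", re.compile(r"\b(refused|declined)\b", re.I)),
    ("deferred", re.compile(r"\b(deferred|defer)\b", re.I)),
    ("hypothetical", re.compile(r"\b(if|will|plan to|consider)\b", re.I)),
    ("historical", re.compile(r"\b(previous|prior|history of|in (19|20)\d{2})\b", re.I)),
]


def classify_dre_mention(sentence: str) -> str:
    """Return the first status whose cue appears in the sentence, else 'current'."""
    for status, pattern in STATUS_RULES:
        if pattern.search(sentence):
            return status
    return "current"  # default when no temporal or refusal cue is present


examples = [
    "DRE performed today: prostate smooth, nontender.",
    "Patient declined DRE.",
    "DRE deferred to urology.",
    "Will perform DRE if PSA rises.",
    "DRE in 2015 was normal.",
]
for sentence in examples:
    print(classify_dre_mention(sentence), "|", sentence)
```

Rule order matters: a sentence such as "Patient refused DRE; will reattempt" is labeled refused because that rule is checked first.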

Identifying Documentation Trends

Recognizing and tracking metadata trends in documentation may improve documentation directly, and it also has a role in the intelligent modification of EHR systems and documentation policies. Young-Wolff et al. used an iterative rule-based NLP algorithm to demonstrate that documentation of electronic nicotine delivery systems (ENDS) increased over nine years, and the team recommended adding an ENDS structured field to the EHR.114 Because clinicians vary in their documentation styles, Gong et al. used unsupervised learning on clinical note metadata to extract a “gold standard” style by evaluating note-level and user-level production patterns. Their results suggested that uninterrupted morning charting could improve efficiency.115
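
Documentation trends like the one reported by Young-Wolff et al. can be summarized by computing, for each year, the fraction of notes that mention a concept, and then fitting a straight line to those fractions. The sketch below is hypothetical throughout: the ENDS pattern is a small invented rule, the notes are toy examples, and the least-squares slope is a simple trend summary chosen for illustration.

```python
import re
from collections import defaultdict

# Hypothetical pattern for ENDS mentions (e-cigarettes, vaping).
ENDS = re.compile(r"\b(e-?cig(arette)?s?|vap(e|es|ing)|electronic cigarettes?)\b", re.I)


def yearly_mention_rate(notes):
    """notes: iterable of (year, text). Returns {year: fraction of that year's notes mentioning ENDS}."""
    totals, hits = defaultdict(int), defaultdict(int)
    for year, text in notes:
        totals[year] += 1
        if ENDS.search(text):
            hits[year] += 1
    return {year: hits[year] / totals[year] for year in sorted(totals)}


def linear_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


toy_notes = [
    (2015, "Smoker, 1 ppd."), (2015, "No tobacco use."),
    (2016, "Uses e-cigarettes daily."), (2016, "Former smoker."),
    (2017, "Reports vaping nicotine."), (2017, "Vapes occasionally."),
]
rates = yearly_mention_rate(toy_notes)
print(rates)                                            # {2015: 0.0, 2016: 0.5, 2017: 1.0}
print(linear_slope(list(rates), list(rates.values())))  # 0.5 (rise in mention rate per year)
```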

Besides individual styles, documentation is complicated by health system factors such as heterogeneous medical forms and many compartmentalized specialties.20,116 AI may help consolidate these trends into intelligently standardized forms and a more efficient system. Dugas et al. automatically compared and visualized trends in medical form similarity using semantic enrichment, rule-based comparison, grid images, and dendrograms.116 Modre-Osprian et al. analyzed topic trends in notes from a collaborative health information network, yielding insights about wireless device usage that improved network functioning.117 Further studies used AI to find trends in EHR audit logs and in note utilization patterns, allowing efficiency trends to be identified.118,119 In total, six studies were found that addressed the domain of identifying documentation trends (Table 1). By surveying metadata trends in both individual documentation patterns and rapidly changing health systems, AI tools could aid system optimization as medical infrastructure changes and care is delivered in new, increasingly specialized ways.
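
Form comparison of the kind Dugas et al. describe presupposes that form items have been mapped to shared codes. Assuming that mapping has already been done (the codes below are invented placeholders, not real terminology codes), pairwise Jaccard similarity gives a simple measure of how much two forms overlap. A matrix of such scores is the kind of input a dendrogram or grid image would then visualize.

```python
from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """Shared items divided by all distinct items across both forms."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def pairwise_form_similarity(forms: dict) -> dict:
    """forms maps a form name to the set of codes for its data items."""
    return {
        (f1, f2): jaccard(forms[f1], forms[f2])
        for f1, f2 in combinations(sorted(forms), 2)
    }


# Invented placeholder codes; a real comparison would use codes from a shared terminology.
forms = {
    "site_A_prostate": {"PSA", "GLEASON", "TNM_T", "TNM_N"},
    "site_B_prostate": {"PSA", "GLEASON", "TNM_T", "BIOPSY_CORES"},
    "site_A_breast": {"HER2", "ER", "PR", "TNM_T"},
}
for pair, score in pairwise_form_similarity(forms).items():
    print(pair, round(score, 2))
```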

Discussion

As reviewed above, current AI tools improve clinical documentation by structuring data, annotating notes, detecting errors, and providing real-time assistance. Other features of AI CDI tools include assessing clinical note quality based on concept domains and providing insight into hospital systems and provider practices. To our knowledge, a truly practical, comprehensive clinical AI assistant has not yet been reported in the peer-reviewed literature, but current AI tools could confer specific improvements to documentation workflows.

To overcome limitations in generalizability, future work should use larger datasets and broaden the availability of training data. Processing large amounts of data requires substantial computational power, a requirement that may become more feasible as computational capacity continues to increase.9,120 This necessitates carefully regulated and secure computing systems that also account for documentation variations between geographic regions, institutions, and EHRs.121 AI-based systems that promote documentation interoperability could help overcome these challenges by creating larger unified training datasets.65,107 While a widely generalizable AI system could possibly be trained, the necessary data are often proprietary and not readily shared. Transfer learning techniques, which apply previously learned information to a new situation or context, may bridge this gap, enabled by collaboration and data sharing between health systems.122 Lexical variation can be addressed either with semantic similarity in rule-based NLP or with machine learning techniques.121
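
As a minimal sketch of rule-based handling of lexical variation, the function below first applies a hand-written abbreviation map and then falls back to approximate string matching with Python's standard `difflib.get_close_matches`. String similarity only catches spelling variants; it stands in for, and is not an example of, true semantic similarity, which would require an ontology or learned representations. The vocabulary and cutoff are hypothetical.

```python
import difflib

# Hypothetical abbreviation map and canonical vocabulary.
ABBREVIATIONS = {"htn": "hypertension", "dm": "diabetes", "pna": "pneumonia"}
CANONICAL_TERMS = ["hypertension", "diabetes", "pneumonia"]


def normalize_term(term: str, cutoff: float = 0.8):
    """Map a lexical variant to a canonical term, or return None if nothing is close enough."""
    key = term.lower().strip()
    if key in ABBREVIATIONS:  # exact abbreviation lookup (rule-based synonym map)
        return ABBREVIATIONS[key]
    # Fuzzy fallback for misspellings, scored by difflib's SequenceMatcher ratio.
    matches = difflib.get_close_matches(key, CANONICAL_TERMS, n=1, cutoff=cutoff)
    return matches[0] if matches else None


for variant in ["HTN", "hypertention", "diabetis", "fracture"]:
    print(variant, "->", normalize_term(variant))
```

Terms with no close match, such as "fracture" here, map to `None` rather than being forced onto the nearest vocabulary entry.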

Legal and ethical concerns relating to encounter recording and AI processing must be addressed alongside these changes if such systems are to succeed in the long term.123 Patients may have privacy concerns regarding the automatic collection, storage, and processing of encounter data, and the liability implications of AI-assisted clinical documentation, such as where blame falls when a documentation error occurs, are currently unclear. Ethical concerns raised in the literature include the nature of informed consent, algorithmic fairness and bias, data privacy, safety, and transparency.124 Legal challenges include safety, effectiveness, liability, data protection, privacy, cybersecurity, and intellectual property.124

For AI CDI systems to be implemented clinically, they must increase efficiency without sacrificing accuracy.71 In some cases, the time spent fixing errors produced by AI outweighs the time saved by using the AI tool.71 The accuracy of AI-assisted versus clinician-generated notes has not been widely compared,84 and studies of clinical outcomes and patient care, which must be assessed before widespread AI CDI implementation, are lacking.90–95
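
The trade-off between time saved and time spent correcting AI errors can be made concrete with a back-of-the-envelope model. The sketch below assumes, hypothetically, that drafting with the AI tool takes a fixed time per note and that each AI-introduced error costs a fixed number of minutes to correct. All numbers are illustrative and are not drawn from the reviewed studies.

```python
def net_minutes_saved(unassisted_min, ai_draft_min, n_errors, min_per_correction):
    """Minutes saved per note; a negative value means corrections outweigh the AI's time savings."""
    assisted_total = ai_draft_min + n_errors * min_per_correction
    return unassisted_min - assisted_total


def break_even_errors(unassisted_min, ai_draft_min, min_per_correction):
    """Errors per note at which the AI-assisted workflow stops saving time."""
    return (unassisted_min - ai_draft_min) / min_per_correction


print(net_minutes_saved(16, 6, 4, 1.5))  # 4.0 minutes saved per note
print(net_minutes_saved(16, 6, 8, 1.5))  # -2.0: the assisted workflow now costs 2 extra minutes
print(break_even_errors(16, 6, 1.5))     # about 6.67 errors per note
```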

While further studies of AI CDI tools are needed, to our knowledge this systematic review is the first to highlight a decrease in peer-reviewed AI CDI studies published following the release of ChatGPT on November 30, 2022.125 The reasons for this trend are not entirely clear but may include researchers publishing on preprint servers amid rapidly advancing techniques or developing proprietary models without publishing. The advent of large transformer language models shows promise, but rigorous peer-reviewed evaluation of proprietary models for improving clinical documentation is lacking.126,127

Limitations and Future Studies

Strengths of this narrative systematic review include that it presents AI tools for clinical documentation improvement in the context of medical practice and health systems and that, to our knowledge, it is the first study to do so comprehensively. This study is subject to several limitations: the relevance of studies was determined by the authors, the efficacy of the tools was not objectively compared, commercial programs not studied in the peer-reviewed literature could not be evaluated, and relevant studies may exist outside the queried databases. Concerns regarding cost, physician and hospital system acceptance, and potential job loss related to AI CDI tools are not negligible; however, they are beyond the scope of this review, which set out to report solely on methods and formats for improving documentation.

Conclusion

While current AI tools offer targeted improvements to clinical documentation processes, moderately high error rates preclude the broad use of a comprehensive AI documentation assistant. Large language models have the potential to greatly reduce error rates, but many of these models are proprietary and not well studied in the peer-reviewed literature. In the future, this hurdle may be overcome through further rigorous tool evaluation and development in direct consultation with physicians, as well as robust discussion of the legal and ethical ramifications of AI CDI tools.

References

1. Arndt BG, Beasley JW, Watkinson MD, et al. Tethered to the EHR: Primary Care Physician Workload Assessment Using EHR Event Log Data and Time-Motion Observations. Ann Fam Med. 2017;15(5):419-426. doi:10.1370/afm.2121

2. Blanes-Selva V, Tortajada S, Vilar R, Valdivieso B, Garcia-Gomez J. Machine Learning-Based Identification of Obesity from Positive and Unlabelled Electronic Health Records. Stud Health Technol Inform. 2020;270:864-868. doi:10.3233/SHTI200284

3. Sinsky C, Colligan L, Li L, et al. Allocation of Physician Time in Ambulatory Practice: A Time and Motion Study in 4 Specialties. Annals of Internal Medicine. Published online September 6, 2016. Accessed January 1, 2022. https://www.acpjournals.org/doi/abs/10.7326/M16-0961

4. Tai-Seale M, Olson CW, Li J, et al. Electronic Health Record Logs Indicate That Physicians Split Time Evenly Between Seeing Patients And Desktop Medicine. Health Aff (Millwood). 2017;36(4):655-662. doi:10.1377/hlthaff.2016.0811

5. Shanafelt TD, Dyrbye LN, Sinsky C, et al. Relationship Between Clerical Burden and Characteristics of the Electronic Environment With Physician Burnout and Professional Satisfaction. Mayo Clinic Proceedings. 2016;91(7):836-848. doi:10.1016/j.mayocp.2016.05.007

6. Towers AL. Clinical Documentation Improvement—A Physician Perspective: Insider Tips for getting Physician Participation in CDI Programs. Journal of AHIMA. 2013;84(7):34-41.

7. Dehghan M, Dehghan D, Sheikhrabori A, Sadeghi M, Jalalian M. Quality improvement in clinical documentation: does clinical governance work? J Multidiscip Healthc. 2013;6:441-450. doi:10.2147/JMDH.S53252

8. Lin SY, Shanafelt TD, Asch SM. Reimagining Clinical Documentation With Artificial Intelligence. Mayo Clinic Proceedings. 2018;93(5):563-565. doi:10.1016/j.mayocp.2018.02.016

9. Luh JY, Thompson RF, Lin S. Clinical Documentation and Patient Care Using Artificial Intelligence in Radiation Oncology. Journal of the American College of Radiology. 2019;16(9):1343-1346. doi:10.1016/j.jacr.2019.05.044

10. Campbell S, Giadresco K. Computer-assisted clinical coding: A narrative review of the literature on its benefits, limitations, implementation and impact on clinical coding professionals. HIM J. 2020;49(1):5-18. doi:10.1177/1833358319851305

11. Agaronnik ND, Lindvall C, El-Jawahri A, He W, Iezzoni LI. Challenges of Developing a Natural Language Processing Method With Electronic Health Records to Identify Persons With Chronic Mobility Disability. Archives of Physical Medicine and Rehabilitation. 2020;101(10):1739-1746. doi:10.1016/j.apmr.2020.04.024

12. Barrett N, Weber-Jahnke JH. Applying Natural Language Processing Toolkits to Electronic Health Records – An Experience Report. In: Advances in Information Technology and Communication in Health. Vol 143. Studies in Health Technology and Informatics. IOS Press; 2009:441-446.

13. Blackley SV, Schubert VD, Goss FR, Al Assad W, Garabedian PM, Zhou L. Physician use of speech recognition versus typing in clinical documentation: A controlled observational study. International Journal of Medical Informatics. 2020;141:104178. doi:10.1016/j.ijmedinf.2020.104178

14. Blanco A, Perez A, Casillas A. Exploiting ICD Hierarchy for Classification of EHRs in Spanish Through Multi-Task Transformers. IEEE Journal of Biomedical and Health Informatics. 2022;26(3):1374-1383. doi:10.1109/JBHI.2021.3112130

15. Bozkurt S, Kan KM, Ferrari MK, et al. Is it possible to automatically assess pretreatment digital rectal examination documentation using natural language processing? A single-centre retrospective study. BMJ Open. 2019;9(7):e027182. doi:10.1136/bmjopen-2018-027182

16. Friedman C. Discovering Novel Adverse Drug Events Using Natural Language Processing and Mining of the Electronic Health Record. In: Combi C, Shahar Y, Abu-Hanna A, eds. Artificial Intelligence in Medicine. Vol 5651. Lecture Notes in Computer Science. Springer Berlin Heidelberg; 2009:1-5. doi:10.1007/978-3-642-02976-9_1

17. Guo Y, Al-Garadi MA, Book WM, et al. Supervised Text Classification System Detects Fontan Patients in Electronic Records With Higher Accuracy Than ICD Codes. J Am Heart Assoc. 2023;12(13):e030046. doi:10.1161/JAHA.123.030046

18. He J, Mark L, Hilton C, et al. A Comparison Of Structured Data Query Methods Versus Natural Language Processing To Identify Metastatic Melanoma Cases From Electronic Health Records. Int J Computational Medicine and Healthcare. 2019;1(1):101-111.

19. Komkov AA, Mazaev VP, Ryazanova SV, et al. Application of the program for artificial intelligence analytics of paper text and segmentation by specified parameters in clinical practice. Cardiovascular Therapy and Prevention (Russian Federation). 2022;21(12). doi:10.15829/1728-8800-2022-3458

20. Krumm R, Semjonow A, Tio J, et al. The need for harmonized structured documentation and chances of secondary use – Results of a systematic analysis with automated form comparison for prostate and breast cancer. Journal of Biomedical Informatics. 2014;51:86-99. doi:10.1016/j.jbi.2014.04.008

21. Lalor JP, Woolf B, Yu H. Improving Electronic Health Record Note Comprehension With NoteAid: Randomized Trial of Electronic Health Record Note Comprehension Interventions With Crowdsourced Workers. J Med Internet Res. 2019;21(1):e10793. doi:10.2196/10793

22. Lalor JP, Hu W, Tran M, Wu H, Mazor KM, Yu H. Evaluating the Effectiveness of NoteAid in a Community Hospital Setting: Randomized Trial of Electronic Health Record Note Comprehension Interventions With Patients. J Med Internet Res. 2021;23(5):e26354. doi:10.2196/26354

23. Liang C, Kelly S, Shen R, et al. Predicting Wilson disease progression using machine learning with real-world electronic health records. Pharmacoepidemiology and Drug Safety. 2022;31:63-64.

24. Liao KP, Cai T, Savova GK, et al. Development of phenotype algorithms using electronic medical records and incorporating natural language processing. BMJ. 2015;350:h1885. doi:10.1136/bmj.h1885

25. Marshall T, Nickels L, Edgerton E, Brady P, Lee J, Hagedorn P. Linguistic Indicators of Diagnostic Uncertainty in Clinical Documentation for Hospitalized Children. Diagnosis. 2022;9(2):eA85-eA86. doi:10.1515/dx-2022-0024

26. Mohsen F, Ali H, El Hajj N, Shah Z. Artificial intelligence-based methods for fusion of electronic health records and imaging data. Scientific Reports. 2022;12(1). doi:10.1038/s41598-022-22514-4

27. Shah DR, Ajay Dhawan D, Shah SN, Rajesh Shah P, Francis S. Panacea: A Novel Architecture for Electronic Health Records System using Blockchain and Machine Learning. In: 2022 Second International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT). IEEE; 2022:1-7. doi:10.1109/ICAECT54875.2022.9807928

28. Shimazui T, Nakada TA, Kuroiwa S, Toyama Y, Oda S. Speech recognition shortens the recording time of prehospital medical documentation. The American Journal of Emergency Medicine. 2021;49:414-416. doi:10.1016/j.ajem.2021.02.025

29. Thompson HM, Sharma B, Bhalla S, et al. Bias and fairness assessment of a natural language processing opioid misuse classifier: detection and mitigation of electronic health record data disadvantages across racial subgroups. Journal of the American Medical Informatics Association. 2021;28(11):2393-2403. doi:10.1093/jamia/ocab148

30. Voytovich L, Greenberg C. Natural Language Processing: Practical Applications in Medicine and Investigation of Contextual Autocomplete. Acta Neurochirurgica, Supplementum. 2022;134:207-214. doi:10.1007/978-3-030-85292-4_24

31. Wang J, Yu S, Davoudi A, Mowery DL. A Preliminary Characterization of Canonicalized and Non-Canonicalized Section Headers Across Variable Clinical Note Types. AMIA Annu Symp Proc. 2020;2020:1268-1276.

32. Yusufov M, Pirl WF, Braun I, Tulsky JA, Lindvall C. Natural Language Processing for Computer-Assisted Chart Review to Assess Documentation of Substance use and Psychopathology in Heart Failure Patients Awaiting Cardiac Resynchronization Therapy. Journal of Pain and Symptom Management. 2022;64(4):400-409. doi:10.1016/j.jpainsymman.2022.06.007

33. Zhang R, Pakhomov SVS, Arsoniadis EG, Lee JT, Wang Y, Melton GB. Detecting clinically relevant new information in clinical notes across specialties and settings. BMC Med Inform Decis Mak. 2017;17(Suppl 2):68. doi:10.1186/s12911-017-0464-y

34. Chen ES, Carter EW, Sarkar IN, Winden TJ, Melton GB. Examining the use, contents, and quality of free-text tobacco use documentation in the Electronic Health Record. AMIA Annu Symp Proc. 2014;2014:366-374.

35. Clapp MA, McCoy TH, James KE, Kaimal AJ, Perlis RH. The utility of electronic health record data for identifying postpartum hemorrhage. American Journal of Obstetrics & Gynecology MFM. 2021;3(2):100305. doi:10.1016/j.ajogmf.2020.100305

36. Ehrenfeld JM, Gottlieb KG, Beach LB, Monahan SE, Fabbri D. Development of a Natural Language Processing Algorithm to Identify and Evaluate Transgender Patients in Electronic Health Record System. Ethn Dis. 2019;29(Supp2):441-450. doi:10.18865/ed.29.S2.441

37. Heale B, Overby C, Del Fiol G, et al. Integrating Genomic Resources with Electronic Health Records using the HL7 Infobutton Standard. Appl Clin Inform. 2016;07(03):817-831. doi:10.4338/ACI-2016-04-RA-0058

38. Mantey EA, Zhou C, Srividhya SR, Jain SK, Sundaravadivazhagan B. Integrated Blockchain-Deep Learning Approach for Analyzing the Electronic Health Records Recommender System. Frontiers in Public Health. 2022;10:905265. doi:10.3389/fpubh.2022.905265

39. Noussa-Yao J, Boussadi A, Richard M, Heudes D, Degoulet P. Using a snowflake data model and autocompletion to support diagnostic coding in acute care hospitals. Stud Health Technol Inform. 2015;210:334-338.

40. Pierce EJ, Boytsov NN, Vasey JJ, et al. A Qualitative Analysis of Provider Notes of Atopic Dermatitis-Related Visits Using Natural Language Processing Methods. Dermatol Ther (Heidelb). 2021;11(4):1305-1318. doi:10.1007/s13555-021-00553-5

41. Porcelli PJ, Lobach DF. Integration of clinical decision support with on-line encounter documentation for well child care at the point of care. Proc AMIA Symp. 1999:599-603.

42. Vawdrey DK. Assessing usage patterns of electronic clinical documentation templates. AMIA Annu Symp Proc. 2008;2008:758-762.

43. Zvára K, Tomecková M, Peleška J, Svátek V, Zvárová J. Tool-supported Interactive Correction and Semantic Annotation of Narrative Clinical Reports. Methods Inf Med. 2017;56(03):217-229. doi:10.3414/ME16-01-0083

44. Gicquel Q, Tvardik N, Bouvry C, et al. Annotation methods to develop and evaluate an expert system based on natural language processing in electronic medical records. In: MEDINFO 2015: eHealth-Enabled Health. Vol 216. IOS Press; 2015:1067.

45. Gurupur VP, Shelleh M. Machine Learning Analysis for Data Incompleteness (MADI): Analyzing the Data Completeness of Patient Records Using a Random Variable Approach to Predict the Incompleteness of Electronic Health Records. IEEE Access. 2021;9:95994-96001. doi:10.1109/ACCESS.2021.3095240

46. Hyppönen H, Saranto K, Vuokko R, et al. Impacts of structuring the electronic health record: A systematic review protocol and results of previous reviews. International Journal of Medical Informatics. 2014;83(3):159-169. doi:10.1016/j.ijmedinf.2013.11.006

47. Rosenbloom ST, Denny JC, Xu H, Lorenzi N, Stead WW, Johnson KB. Data from clinical notes: a perspective on the tension between structure and flexible documentation. J Am Med Inform Assoc. 2011;18(2):181-186. doi:10.1136/jamia.2010.007237

48. Klinger EV, Carlini SV, Gonzalez I, et al. Accuracy of Race, Ethnicity, and Language Preference in an Electronic Health Record. J Gen Intern Med. 2015;30(6):719-723. doi:10.1007/s11606-014-3102-8

49. Sholle ET, Pinheiro LC, Adekkanattu P, et al. Underserved populations with missing race ethnicity data differ significantly from those with structured race/ethnicity documentation. J Am Med Inform Assoc. 2019;26(8-9):722-729. doi:10.1093/jamia/ocz040

50. Rabbani N, Bedgood M, Brown C, et al. A Natural Language Processing Model to Identify Confidential Content in Adolescent Clinical Notes. Appl Clin Inform. 2023;14(03):400-407. doi:10.1055/a-2051-9764

51. Gray GM, Zirikly A, Ahumada LM, et al. Application of natural language processing to identify social needs from patient medical notes: development and assessment of a scalable, performant, and rule-based model in an integrated healthcare delivery system. JAMIA Open. 2023;6(4):ooad085. doi:10.1093/jamiaopen/ooad085

52. Yang A, Kamien S, Davoudi A, et al. Relation Detection to Identify Stroke Assertions from Clinical Notes Using Natural Language Processing. In: MEDINFO 2023 — The Future Is Accessible. IOS Press; 2024:619-623. doi:10.3233/SHTI231039

53. Wang L, Blackley SV, Blumenthal KG, et al. A dynamic reaction picklist for improving allergy reaction documentation in the electronic health record. J Am Med Inform Assoc. 2020;27(6):917-923. doi:10.1093/jamia/ocaa042

54. Gao Y, Dligach D, Miller T, Xu D, Churpek MM, Afshar M. Summarizing Patients’ Problems from Hospital Progress Notes Using Pre-trained Sequence-to-Sequence Models. In: Proceedings of the 29th International Conference on Computational Linguistics. International Committee on Computational Linguistics; 2022:2979-2991. https://aclanthology.org/2022.coling-1.264

55. Allen KS, Hood DR, Cummins J, Kasturi S, Mendonca EA, Vest JR. Natural language processing-driven state machines to extract social factors from unstructured clinical documentation. JAMIA Open. 2023;6(2):ooad024. doi:10.1093/jamiaopen/ooad024

56. Ozonoff A, Milliren CE, Fournier K, et al. Electronic surveillance of patient safety events using natural language processing. Health Informatics J. 2022;28(4):14604582221132429. doi:10.1177/14604582221132429

57. Yoshida K, Cai T, Bessette LG, et al. Improving the accuracy of automated gout flare ascertainment using natural language processing of electronic health records and linked Medicare claims data. Pharmacoepidemiology and Drug Safety. 2024;33(1):e5684. doi:10.1002/pds.5684

58. Moen H, Hakala K, Peltonen LM, et al. Assisting nurses in care documentation: from automated sentence classification to coherent document structures with subject headings. J Biomed Semantics. 2020;11:10. doi:10.1186/s13326-020-00229-7

59. Lybarger K, Dobbins NJ, Long R, et al. Leveraging natural language processing to augment structured social determinants of health data in the electronic health record. J Am Med Inform Assoc. 2023;30(8):1389-1397. doi:10.1093/jamia/ocad073

60. Litake O, Park BH, Tully JL, Gabriel RA. Constructing synthetic datasets with generative artificial intelligence to train large language models to classify acute renal failure from clinical notes. Journal of the American Medical Informatics Association. 2024;31(6):1404-1410. doi:10.1093/jamia/ocae081

61. Chiang CC, Luo M, Dumkrieger G, et al. A large language model–based generative natural language processing framework fine-tuned on clinical notes accurately extracts headache frequency from electronic health records. Headache: The Journal of Head and Face Pain. 2024;64(4):400-409. doi:10.1111/head.14702

62. Leroy G, Andrews JG, KeAlohi-Preece M, et al. Transparent deep learning to identify autism spectrum disorders (ASD) in EHR using clinical notes. Journal of the American Medical Informatics Association. 2024;31(6):1313-1321. doi:10.1093/jamia/ocae080

63. Hua Y, Blackley SV, Shinn AK, Skinner JP, Moran LV, Zhou L. Identifying Psychosis Episodes in Psychiatric Admission Notes via Rule-based Methods, Machine Learning, and Pre-Trained Language Models. medRxiv. Preprint posted online March 19, 2024. doi:10.1101/2024.03.18.24304475

64. Visser JJ, de Vries M, Kors JA. Automatic detection of actionable findings and communication mentions in radiology reports using natural language processing. European Radiology. 2022;32(6):3996-4002. doi:10.1007/s00330-021-08467-8

65. Kiser AC, Eilbeck K, Ferraro JP, Skarda DE, Samore MH, Bucher B. Standard Vocabularies to Improve Machine Learning Model Transferability With Electronic Health Record Data: Retrospective Cohort Study Using Health Care-Associated Infection. JMIR Med Inform. 2022;10(8):e39057. doi:10.2196/39057

66. Irizarry T, Dabbs AD, Curran CR. Patient Portals and Patient Engagement: A State of the Science Review. Journal of Medical Internet Research. 2015;17(6):e4255. doi:10.2196/jmir.4255

67. Pyper C, Amery J, Watson M, Crook C. Patients’ experiences when accessing their on-line electronic patient records in primary care. British Journal of General Practice. 2004;54(498):38-43.

68. Chen J, Druhl E, Polepalli Ramesh B, et al. A Natural Language Processing System That Links Medical Terms in Electronic Health Record Notes to Lay Definitions: System Development Using Physician Reviews. J Med Internet Res. 2018;20(1):e26. doi:10.2196/jmir.8669

69. Moramarco F, Juric D, Savkov A, et al. Towards more patient friendly clinical notes through language models and ontologies. AMIA Annu Symp Proc. 2021;2021:881-890.

70. Burnier M, Egan BM. Adherence in Hypertension. Circulation Research. 2019;124(7):1124-1140. doi:10.1161/CIRCRESAHA.118.313220

71. Blackley SV, Huynh J, Wang L, Korach Z, Zhou L. Speech recognition for clinical documentation from 1990 to 2018: a systematic review. J Am Med Inform Assoc. 2019;26(4):324-338. doi:10.1093/jamia/ocy179

72. Klatt EC. Voice-activated dictation for autopsy pathology. Computers in Biology and Medicine. 1991;21(6):429-433. doi:10.1016/0010-4825(91)90044-A

73. Vorbeck F, Ba-Ssalamah A, Kettenbach J, Huebsch P. Report generation using digital speech recognition in radiology. European Radiology. 2000;10(12):1976-1982. doi:10.1007/s003300000459

74. Rana DS, Hurst G, Shepstone L, Pilling J, Cockburn J, Crawford M. Voice recognition for radiology reporting: Is it good enough? Clinical Radiology. 2005;60(11):1205-1212. doi:10.1016/j.crad.2005.07.002

75. Alapetite A. Speech recognition for the anaesthesia record during crisis scenarios. International Journal of Medical Informatics. 2008;77(7):448-460. doi:10.1016/j.ijmedinf.2007.08.007

76. Sánchez MJG, Torres JMF, Calderón LP, Cervera JN. Application of Business Process Management to drive the deployment of a speech recognition system in a healthcare organization. Studies in Health Technology and Informatics. 2008;136:511-516.

77. Feldman CA, Stevens D. Pilot study on the feasibility of a computerized speech recognition charting system. Community Dentistry and Oral Epidemiology. 1990;18(4):213-215. doi:10.1111/j.1600-0528.1990.tb00060.x

78. Bhan SN, Coblentz CL, Norman GR, Ali SH. Effect of Voice Recognition on Radiologist Reporting Time. Canadian Association of Radiologists Journal. 2008;59(4):203-209.

79. Pezzullo JA, Tung GA, Rogg JM, Davis LM, Brody JM, Mayo-Smith WW. Voice Recognition Dictation: Radiologist as Transcriptionist. J Digit Imaging. 2008;21(4):384-389. doi:10.1007/s10278-007-9039-2

80. Segrelles JD, Medina R, Blanquer I, Martí-Bonmatí L. Increasing the Efficiency on Producing Radiology Reports for Breast Cancer Diagnosis by Means of Structured Reports. Methods Inf Med. 2017;56(03):248-260. doi:10.3414/ME16-01-0091

81. Hawkins CM, Hall S, Hardin J, Salisbury S, Towbin AJ. Prepopulated Radiology Report Templates: A Prospective Analysis of Error Rate and Turnaround Time. J Digit Imaging. 2012;25(4):504-511. doi:10.1007/s10278-012-9455-9

82. dela Cruz JE, Shabosky JC, Albrecht M, et al. Typed Versus Voice Recognition for Data Entry in Electronic Health Records: Emergency Physician Time Use and Interruptions. Western Journal of Emergency Medicine. 2014;15(4):541. doi:10.5811/westjem.2014.3.19658

83. Hanna TN, Shekhani H, Maddu K, Zhang C, Chen Z, Johnson JO. Structured report compliance: effect on audio dictation time, report length, and total radiologist study time. Emerg Radiol. 2016;23(5):449-453. doi:10.1007/s10140-016-1418-x

84. Lybarger K, Ostendorf M, Yetisgen M. Automatically Detecting Likely Edits in Clinical Notes Created Using Automatic Speech Recognition. AMIA Annu Symp Proc. 2018;2017:1186-1195.

85. Minn MJ, Zandieh AR, Filice RW. Improving Radiology Report Quality by Rapidly Notifying Radiologist of Report Errors. J Digit Imaging. 2015;28(4):492-498. doi:10.1007/s10278-015-9781-9

86. Voll K, Atkins S, Forster B. Improving the Utility of Speech Recognition Through Error Detection. J Digit Imaging. 2008;21(4):371-377. doi:10.1007/s10278-007-9034-7

87. Lee YM, Bacchi S, Sia D, Casson RJ, Chan W. Optimising vitrectomy operation note coding with machine learning. Clin Exp Ophthalmol. 2023;51(6):577-584. doi:10.1111/ceo.14257

88. Goss FR, Blackley SV, Ortega CA, et al. A clinician survey of using speech recognition for clinical documentation in the electronic health record. International Journal of Medical Informatics. 2019;130:103938. doi:10.1016/j.ijmedinf.2019.07.017

89. Deliberato RO, Celi LA, Stone DJ. Clinical Note Creation, Binning, and Artificial Intelligence. JMIR Med Inform. 2017;5(3):e24. doi:10.2196/medinform.7627

90. Wang J, Yang J, Zhang H, et al. PhenoPad: Building AI enabled note-taking interfaces for patient encounters. npj Digit Med. 2022;5(1):12. doi:10.1038/s41746-021-00555-9

91. Mairittha T, Mairittha N, Inoue S. Evaluating a Spoken Dialogue System for Recording Systems of Nursing Care. Sensors (Basel, Switzerland). 2019;19(17):3736. doi:10.3390/s19173736

92. Kaufman D, Sheehan B, Stetson P, et al. Natural Language Processing-Enabled and Conventional Data Capture Methods for Input to Electronic Health Records: A Comparative Usability Study. JMIR Medical Informatics. 2016;4(4):21-37. doi:10.2196/medinform.5544

93. Xia X, Ma Y, Luo Y, Lu J. An online intelligent electronic medical record system via speech recognition. International Journal of Distributed Sensor Networks. 2022;18(11):15501329221134479. doi:10.1177/15501329221134479

94. Owens LM, Wilda JJ, Hahn PY, Koehler T, Fletcher JJ. The association between use of ambient voice technology documentation during primary care patient encounters, documentation burden, and provider burnout. Family Practice. 2024;41(2):86-91. doi:10.1093/fampra/cmad092

95. Hartman VC, Bapat SS, Weiner MG, Navi BB, Sholle ET, Campion TR Jr. A method to automate the discharge summary hospital course for neurology patients. Journal of the American Medical Informatics Association. 2023;30(12):1995-2003. doi:10.1093/jamia/ocad177

96. Aiello FA, Judelson DR, Durgin JM, et al. A physician-led initiative to improve clinical documentation results in improved health care documentation, case mix index, and increased contribution margin. Journal of Vascular Surgery. 2018;68(5):1524-1532. doi:10.1016/j.jvs.2018.02.038

97. Kshatriya BSA, Sagheb E, Wi CI, et al. Deep Learning Identification of Asthma Inhaler Techniques in Clinical Notes. Proceedings (IEEE Int Conf Bioinformatics Biomed). 2020;2020. doi:10.1109/bibm49941.2020.9313224

98. Stemerman R, Arguello J, Brice J, Krishnamurthy A, Houston M, Kitzmiller R. Identification of social determinants of health using multi-label classification of electronic health record clinical notes. JAMIA Open. 2021;4(3):1-11. doi:10.1093/jamiaopen/ooaa069

99. Agaronnik N, Lindvall C, El-Jawahri A, He W, Iezzoni L. Use of Natural Language Processing to Assess Frequency of Functional Status Documentation for Patients Newly Diagnosed With Colorectal Cancer. JAMA Oncol. 2020;6(10):1628-1630. doi:10.1001/jamaoncol.2020.2708

100. Tseng E, Schwartz JL, Rouhizadeh M, Maruthur NM. Analysis of Primary Care Provider Electronic Health Record Notes for Discussions of Prediabetes Using Natural Language Processing Methods. J Gen Intern Med. Published online January 19, 2021. doi:10.1007/s11606-020-06400-1

101. Denny JC, Spickard A, Speltz PJ, Porier R, Rosenstiel DE, Powers JS. Using natural language processing to provide personalized learning opportunities from trainee clinical notes. Journal of Biomedical Informatics. 2015;56:292-299. doi:10.1016/j.jbi.2015.06.004

102. Markel A. Copy and Paste of Electronic Health Records: A Modern Medical Illness. The American Journal of Medicine. 2010;123(5):e9. doi:10.1016/j.amjmed.2009.10.012

103. Deng F, Li MD, Wong A, et al. Quality of Documentation of Contrast Agent Allergies in Electronic Health Records. Journal of the American College of Radiology. 2019;16(8):1027-1035. doi:10.1016/j.jacr.2019.01.027

104. Zhang R, Pakhomov SV, Lee JT, Melton GB. Using Language Models to Identify Relevant New Information in Inpatient Clinical Notes. AMIA Annu Symp Proc. 2014;2014:1268-1276.

105. Gabriel RA, Kuo TT, McAuley J, Hsu CN. Identifying and characterizing highly similar notes in big clinical note datasets. Journal of Biomedical Informatics. 2018;82:63-69. doi:10.1016/j.jbi.2018.04.009

106. Seinen TM, Kors JA, van Mulligen EM, Fridgeirsson EA, Verhamme KM, Rijnbeek PR. Using clinical text to refine unspecific condition codes in Dutch general practitioner EHR data. International Journal of Medical Informatics. 2024;189:105506. doi:10.1016/j.ijmedinf.2024.105506

107. Zuo X, Zhou Y, Duke J, et al. Standardizing Multi-site Clinical Note Titles to LOINC Document Ontology: A Transformer-based Approach. AMIA Annu Symp Proc. 2023;2023:834-843.

108. Barcelona V, Scharp D, Moen H, et al. Using Natural Language Processing to Identify Stigmatizing Language in Labor and Birth Clinical Notes. Matern Child Health J. 2024;28(3):578-586. doi:10.1007/s10995-023-03857-4

109. Bozkurt S, Park JI, Kan KM, et al. An Automated Feature Engineering for Digital Rectal Examination Documentation using Natural Language Processing. AMIA Annu Symp Proc. 2018;2018:288-294.

110. Li X, Liu H, Zhang S, et al. Automatic Variance Analysis of Multistage Care Pathways. Stud Health Technol Inform. 2014;205:715-719. doi:10.3233/978-1-61499-432-9-715

111. Razjouyan J, Freytag J, Dindo L, et al. Measuring Adoption of Patient Priorities-Aligned Care Using Natural Language Processing of Electronic Health Records: Development and Validation of the Model. JMIR Medical Informatics. 2021;9(2):e18756. doi:10.2196/18756

112. Steiner JM, Morse C, Lee RY, Curtis JR, Engelberg RA. Sensitivity and Specificity of a Machine Learning Algorithm to Identify Goals-of-care Documentation for Adults With Congenital Heart Disease at the End of Life. Journal of Pain and Symptom Management. 2020;60(3):e33-e36. doi:10.1016/j.jpainsymman.2020.06.018

113. Marshall TL, Nickels LC, Brady PW, Edgerton EJ, Lee JJ, Hagedorn PA. Developing a machine learning model to detect diagnostic uncertainty in clinical documentation. Journal of Hospital Medicine. 2023;18(5):405-412. doi:10.1002/jhm.13080

114. Young-Wolff KC, Klebaner D, Folck B, et al. Do you vape? Leveraging electronic health records to assess clinician documentation of electronic nicotine delivery system use among adolescents and adults. Prev Med. 2017;105:32-36. doi:10.1016/j.ypmed.2017.08.009

115. Gong JJ, Soleimani H, Murray SG, Adler-Milstein J. Characterizing styles of clinical note production and relationship to clinical work hours among first-year residents. Journal of the American Medical Informatics Association. 2022;29(1):120-127. doi:10.1093/jamia/ocab253

116. Dugas M, Fritz F, Krumm R, Breil B. Automated UMLS-Based Comparison of Medical Forms. PLoS ONE. 2013;8(7):e67883. doi:10.1371/journal.pone.0067883

117. Modre-Osprian R, Gruber K, Kreiner K, Poelzl G, Kastner P. Textual Analysis of Collaboration Notes of the Telemedical Heart Failure Network HerzMobil Tirol. In: Hayn D, Schreier G, Ammenwerth E, Hörbst A, eds. eHealth2015 - Health Informatics Meets eHealth. Vol 212. IOS Press; 2015:57-64.

118. Chen B, Alrifai W, Gao C, et al. Mining tasks and task characteristics from electronic health record audit logs with unsupervised machine learning. Journal of the American Medical Informatics Association. 2021;28(6):1168-1177. doi:10.1093/jamia/ocaa338

119. Rajkomar A, Yim J, Grumbach K, Parekh A. Weighting Primary Care Patient Panel Size: A Novel Electronic Health Record-Derived Measure Using Machine Learning. JMIR Medical Informatics. 2016;4(4):3-15. doi:10.2196/medinform.6530

120. Stead WW. Clinical Implications and Challenges of Artificial Intelligence and Deep Learning. JAMA. 2018;320(11):1107-1108. doi:10.1001/jama.2018.11029

121. Sohn S, Wang Y, Wi CI, et al. Clinical documentation variations and NLP system portability: a case study in asthma birth cohorts across institutions. J Am Med Inform Assoc. 2017;25(3):353-359. doi:10.1093/jamia/ocx138

122. Peng Y, Yan S, Lu Z. Transfer Learning in Biomedical Natural Language Processing: An Evaluation of BERT and ELMo on Ten Benchmarking Datasets. arXiv:1906.05474 [cs]. Published online June 18, 2019. Accessed January 9, 2022. http://arxiv.org/abs/1906.05474

123. Kocaballi AB, Ijaz K, Laranjo L, et al. Envisioning an artificial intelligence documentation assistant for future primary care consultations: A co-design study with general practitioners. J Am Med Inform Assoc. 2020;27(11):1695-1704. doi:10.1093/jamia/ocaa131

124. Gerke S, Minssen T, Cohen G. Chapter 12 - Ethical and legal challenges of artificial intelligence-driven healthcare. In: Bohr A, Memarzadeh K, eds. Artificial Intelligence in Healthcare. Academic Press; 2020:295-336. doi:10.1016/B978-0-12-818438-7.00012-5

125. Wu T, He S, Liu J, et al. A Brief Overview of ChatGPT: The History, Status Quo and Potential Future Development. IEEE/CAA J Autom Sinica. 2023;10(5):1122-1136. doi:10.1109/JAS.2023.123618

126. OpenAI. GPT-4 Technical Report. Published online 2023. doi:10.48550/ARXIV.2303.08774

127. Singhal K, Tu T, Gottweis J, et al. Towards Expert-Level Medical Question Answering with Large Language Models. Published online 2023. doi:10.48550/ARXIV.2305.09617

128. Lindvall C, Deng C, Moseley E, et al. Natural Language Processing to Identify Advance Care Planning Documentation in a Multisite Pragmatic Clinical Trial. Journal of Pain and Symptom Management. 2022;63(1):e29-e36. doi:10.1016/j.jpainsymman.2021.06.025

129. Afshar M, Phillips A, Karnik N, et al. Natural language processing and machine learning to identify alcohol misuse from the electronic health record in trauma patients: development and internal validation. Journal of the American Medical Informatics Association. 2019;26(3):254-261. doi:10.1093/jamia/ocy166

130. Afzal Z, Schuemie M, van Blijderveen J, Sen E, Sturkenboom M, Kors J. Improving sensitivity of machine learning methods for automated case identification from free-text electronic medical records. BMC Medical Informatics and Decision Making. 2013;13:30. doi:10.1186/1472-6947-13-30

131. Annapragada A, Donaruma-Kwoh M, Starosolski Z. A natural language processing and deep learning approach to identify child abuse from pediatric electronic medical records. PLOS ONE. 2021;16(2):e0247404. doi:10.1371/journal.pone.0247404

132. Brandt CA, Workman TE, Farmer MM, et al. Documentation of screening for firearm access by healthcare providers in the veterans healthcare system: A retrospective study. Western Journal of Emergency Medicine. 2021;22(3):525-532. doi:10.5811/westjem.2021.4.51203

133. Burnett A, Chen N, Zeritis S, et al. Machine learning algorithms to classify self-harm behaviours in New South Wales Ambulance electronic medical records: A retrospective study. International Journal of Medical Informatics. 2022;161:104734. doi:10.1016/j.ijmedinf.2022.104734

134. Caccamisi A, Jorgensen L, Dalianis H, Rosenlund M. Natural language processing and machine learning to enable automatic extraction and classification of patients’ smoking status from electronic medical records. Upsala Journal of Medical Sciences. 2020;125(4):316-324. doi:10.1080/03009734.2020.1792010

135. Carson N, Mullin B, Sanchez M, et al. Identification of suicidal behavior among psychiatrically hospitalized adolescents using natural language processing and machine learning of electronic health records. PLOS ONE. 2019;14(2):e0211116. doi:10.1371/journal.pone.0211116

136. Chase H, Mitrani L, Lu G, Fulgieri D. Early recognition of multiple sclerosis using natural language processing of the electronic health record. BMC Medical Informatics and Decision Making. 2017;17. doi:10.1186/s12911-017-0418-4

137. Chen T, Dredze M, Weiner J, Hernandez L, Kimura J, Kharrazi H. Extraction of Geriatric Syndromes From Electronic Health Record Clinical Notes: Assessment of Statistical Natural Language Processing Methods. JMIR Medical Informatics. 2019;7(1):e13039. doi:10.2196/13039

138. Chilman N, Song X, Roberts A, et al. Text mining occupations from the mental health electronic health record: a natural language processing approach using records from the Clinical Record Interactive Search (CRIS) platform in south London, UK. BMJ Open. 2021;11(3):e042274. doi:10.1136/bmjopen-2020-042274

139. Cohen A, Chamberlin S, Deloughery T, et al. Detecting rare diseases in electronic health records using machine learning and knowledge engineering: Case study of acute hepatic porphyria. PLOS ONE. 2020;15(7):e0235574. doi:10.1371/journal.pone.0235574

140. Corey K, Kashyap S, Lorenzi E, et al. Development and validation of machine learning models to identify high-risk surgical patients using automatically curated electronic health record data (Pythia): A retrospective, single-site study. PLOS Medicine. 2018;15(11):e1002701. doi:10.1371/journal.pmed.1002701

141. Denny J, Choma N, Peterson J, et al. Natural Language Processing Improves Identification of Colorectal Cancer Testing in the Electronic Medical Record. Medical Decision Making. 2012;32(1):188-197. doi:10.1177/0272989X11400418

142. Diao X, Huo Y, Yan Z, et al. An Application of Machine Learning to Etiological Diagnosis of Secondary Hypertension: Retrospective Study Using Electronic Medical Records. JMIR Medical Informatics. 2021;9(1):e19739. doi:10.2196/19739

143. Egleston B, Bai T, Bleicher R, Taylor S, Lutz M, Vucetic S. Statistical inference for natural language processing algorithms with a demonstration using type 2 diabetes prediction from electronic health record notes. Biometrics. 2021;77(3):1089-1100. doi:10.1111/biom.13338

144. Elkin P, Mullin S, Mardekian J, et al. Using Artificial Intelligence With Natural Language Processing to Combine Electronic Health Record’s Structured and Free Text Data to Identify Nonvalvular Atrial Fibrillation to Decrease Strokes and Death: Evaluation and Case-Control Study. Journal of Medical Internet Research. 2021;23(11):e28946. doi:10.2196/28946

145. Chen L, Song L, Shao Y, Li D, Ding K. Using natural language processing to extract clinically useful information from Chinese electronic medical records. International Journal of Medical Informatics. 2019;124:6-12. doi:10.1016/j.ijmedinf.2019.01.004

146. Wang SY, Huang J, Hwang H, Hu W, Tao S, Hernandez-Boussard T. Leveraging weak supervision to perform named entity recognition in electronic health records progress notes to identify the ophthalmology exam. Int J Med Inform. 2022;167:104864. doi:10.1016/j.ijmedinf.2022.104864

147. Yu Z, Yang X, Guo Y, Bian J, Wu Y. Assessing the Documentation of Social Determinants of Health for Lung Cancer Patients in Clinical Narratives. Front Public Health. 2022;10:778463. doi:10.3389/fpubh.2022.778463

148. Ge W, Alabsi H, Jain A, et al. Identifying Patients With Delirium Based on Unstructured Clinical Notes: Observational Study. JMIR Form Res. 2022;6(6):e33834. doi:10.2196/33834

149. Mashima Y, Tamura T, Kunikata J, et al. Using Natural Language Processing Techniques to Detect Adverse Events From Progress Notes Due to Chemotherapy. Cancer Inform. 2022;21:11769351221085064. doi:10.1177/11769351221085064

150. Wu DW, Bernstein JA, Bejerano G. Discovering monogenic patients with a confirmed molecular diagnosis in millions of clinical notes with MonoMiner. Genet Med. Published online 2022. doi:10.1016/j.gim.2022.07.008

151. Masukawa K, Aoyama M, Yokota S, et al. Machine learning models to detect social distress, spiritual pain, and severe physical psychological symptoms in terminally ill patients with cancer from unstructured text data in electronic medical records. Palliative Medicine. doi:10.1177/02692163221105595

152. Yuan Q, Cai T, Hong C, et al. Performance of a Machine Learning Algorithm Using Electronic Health Record Data to Identify and Estimate Survival in a Longitudinal Cohort of Patients With Lung Cancer. JAMA Network Open. 2021;4(7):e2114723. doi:10.1001/jamanetworkopen.2021.14723

153. Parikh R, Linn K, Yan J, et al. A machine learning approach to identify distinct subgroups of veterans at risk for hospitalization or death using administrative and electronic health record data. PLOS ONE. 2021;16(2):e0247203. doi:10.1371/journal.pone.0247203

154. Yang Z, Pou-Prom C, Jones A, et al. Assessment of Natural Language Processing Methods for Ascertaining the Expanded Disability Status Scale Score From the Electronic Health Records of Patients With Multiple Sclerosis: Algorithm Development and Validation Study. JMIR Medical Informatics. 2022;10(1):e25157. doi:10.2196/25157

155. Zhou S, Fernandez-Gutierrez F, Kennedy J, et al. Defining Disease Phenotypes in Primary Care Electronic Health Records by a Machine Learning Approach: A Case Study in Identifying Rheumatoid Arthritis. PLOS ONE. 2016;11(5):e0154515. doi:10.1371/journal.pone.0154515

156. Hatef E, Rouhizadeh M, Nau C, et al. Development and assessment of a natural language processing model to identify residential instability in electronic health records’ unstructured data: a comparison of 3 integrated healthcare delivery systems. JAMIA Open. 2022;5(1):ooac006. doi:10.1093/jamiaopen/ooac006

157. Leiter R, Santus E, Jin Z, et al. Deep Natural Language Processing to Identify Symptom Documentation in Clinical Notes for Patients With Heart Failure Undergoing Cardiac Resynchronization Therapy. Journal of Pain and Symptom Management. 2020;60(5):948. doi:10.1016/j.jpainsymman.2020.06.010

158. Montoto C, Gisbert J, Guerra I, et al. Evaluation of Natural Language Processing for the Identification of Crohn Disease-Related Variables in Spanish Electronic Health Records: A Validation Study for the PREMONITION-CD Project. JMIR Medical Informatics. 2022;10(2):e30345. doi:10.2196/30345

159. Landsman D, Abdelbasit A, Wang C, et al. Cohort profile: St. Michael’s Hospital Tuberculosis Database (SMH-TB), a retrospective cohort of electronic health record data and variables extracted using natural language processing. PLOS ONE. 2021;16(3):e0247872. doi:10.1371/journal.pone.0247872

160. Van Vleck T, Chan L, Coca S, et al. Augmented intelligence with natural language processing applied to electronic health records for identifying patients with non-alcoholic fatty liver disease at risk for disease progression. International Journal of Medical Informatics. 2019;129:334-341. doi:10.1016/j.ijmedinf.2019.06.028

161. Ogunyemi O, Gandhi M, Lee M, et al. Detecting diabetic retinopathy through machine learning on electronic health record data from an urban, safety net healthcare system. JAMIA Open. 2021;4(3):ooab066. doi:10.1093/jamiaopen/ooab066

162. Moehring R, Phelan M, Lofgren E, et al. Development of a Machine Learning Model Using Electronic Health Record Data to Identify Antibiotic Use Among Hospitalized Patients. JAMA Network Open. 2021;4(3):e213460. doi:10.1001/jamanetworkopen.2021.3460

163. Liu G, Xu Y, Wang X, et al. Developing a Machine Learning System for Identification of Severe Hand, Foot, and Mouth Disease from Electronic Medical Record Data. Scientific Reports. 2017;7. doi:10.1038/s41598-017-16521-z

164. Maarseveen T, Meinderink T, Reinders M, et al. Machine Learning Electronic Health Record Identification of Patients with Rheumatoid Arthritis: Algorithm Pipeline Development and Validation Study. JMIR Medical Informatics. 2020;8(11):e23930. doi:10.2196/23930

165. Wang Z, Shah A, Tate A, Denaxas S, Shawe-Taylor J, Hemingway H. Extracting Diagnoses and Investigation Results from Unstructured Text in Electronic Health Records by Semi-Supervised Machine Learning. PLOS ONE. 2012;7(1):e30412. doi:10.1371/journal.pone.0030412

166. Zhong Q, Mittal L, Nathan M, et al. Use of natural language processing in electronic medical records to identify pregnant women with suicidal behavior: towards a solution to the complex classification problem. European Journal of Epidemiology. 2019;34(2):153-162. doi:10.1007/s10654-018-0470-0

167. Ramesh J, Keeran N, Sagahyroon A, Aloul F. Towards Validating the Effectiveness of Obstructive Sleep Apnea Classification from Electronic Health Records Using Machine Learning. Healthcare. 2021;9(11):1450. doi:10.3390/healthcare9111450

168. Zheng T, Xie W, Xu L, et al. A machine learning-based framework to identify type 2 diabetes through electronic health records. International Journal of Medical Informatics. 2017;97:120-127. doi:10.1016/j.ijmedinf.2016.09.014

169. Gustafson E, Pacheco J, Wehbe F, Silverberg J, Thompson W. A Machine Learning Algorithm for Identifying Atopic Dermatitis in Adults from Electronic Health Records. In: 2017 IEEE International Conference on Healthcare Informatics (ICHI). IEEE; 2017:83-90. doi:10.1109/ICHI.2017.31

170. Rouillard C, Nasser M, Hu H, Roblin D. Evaluation of a Natural Language Processing Approach to Identify Social Determinants of Health in Electronic Health Records in a Diverse Community Cohort. Medical Care. 2022;60(3):248-255.

171. Sada Y, Hou J, Richardson P, El-Serag H, Davila J. Validation of Case Finding Algorithms for Hepatocellular Cancer From Administrative Data and Electronic Health Records Using Natural Language Processing. Medical Care. 2016;54(2):E9-E14. doi:10.1097/MLR.0b013e3182a30373

172. Hardjojo A, Gunachandran A, Pang L, et al. Validation of a Natural Language Processing Algorithm for Detecting Infectious Disease Symptoms in Primary Care Electronic Medical Records in Singapore. JMIR Medical Informatics. 2018;6(2):45-59. doi:10.2196/medinform.8204

173. Fernandez-Gutierrez F, Kennedy J, Cooksey R, et al. Mining Primary Care Electronic Health Records for Automatic Disease Phenotyping: A Transparent Machine Learning Framework. Diagnostics. 2021;11(10). doi:10.3390/diagnostics11101908

174. Hazlehurst B, Green C, Perrin N, et al. Using natural language processing of clinical text to enhance identification of opioid-related overdoses in electronic health records data. Pharmacoepidemiology and Drug Safety. 2019;28(8):1143-1151. doi:10.1002/pds.4810

175. Zhang Y, Wang X, Hou Z, Li J. Clinical Named Entity Recognition From Chinese Electronic Health Records via Machine Learning Methods. JMIR Medical Informatics. 2018;6(4):242-254. doi:10.2196/medinform.9965

176. Kim Y, Lee J, Choi S, et al. Validation of deep learning natural language processing algorithm for keyword extraction from pathology reports in electronic health records. Scientific Reports. 2020;10(1). doi:10.1038/s41598-020-77258-w

177. Wheater E, Mair G, Sudlow C, Alex B, Grover C, Whiteley W. A validated natural language processing algorithm for brain imaging phenotypes from radiology reports in UK electronic health records. BMC Medical Informatics and Decision Making. 2019;19(1). doi:10.1186/s12911-019-0908-7

178. Kogan E, Twyman K, Heap J, Milentijevic D, Lin J, Alberts M. Assessing stroke severity using electronic health record data: a machine learning approach. BMC Medical Informatics and Decision Making. 2020;20(1). doi:10.1186/s12911-019-1010-x

179. Zeng Z, Yao L, Roy A, et al. Identifying Breast Cancer Distant Recurrences from Electronic Health Records Using Machine Learning. Journal of Healthcare Informatics Research. 2019;3(3):283-299. doi:10.1007/s41666-019-00046-3

180. Marella W, Sparnon E, Finley E. Screening Electronic Health Record-Related Patient Safety Reports Using Machine Learning. Journal of Patient Safety. 2017;13(1):31-36. doi:10.1097/PTS.0000000000000104

181. Kim J, Hua M, Whittington R, et al. A machine learning approach to identifying delirium from electronic health records. JAMIA Open. 2022;5(2). doi:10.1093/jamiaopen/ooac042

182. Moon S, Carlson L, Moser E, et al. Identifying Information Gaps in Electronic Health Records by Using Natural Language Processing: Gynecologic Surgery History Identification. Journal of Medical Internet Research. 2022;24(1). doi:10.2196/29015

183. Okamoto K, Yamamoto T, Hiragi S, et al. Detecting Severe Incidents from Electronic Medical Records Using Machine Learning Methods. Studies in Health Technology and Informatics. 2020;270:1247-1248. doi:10.3233/SHTI200385

184. Han S, Zhang R, Shi L, et al. Classifying social determinants of health from unstructured electronic health records using deep learning-based natural language processing. Journal of Biomedical Informatics. 2022;127. doi:10.1016/j.jbi.2021.103984

185. Wang X, Hripcsak G, Markatou M, Friedman C. Active Computerized Pharmacovigilance Using Natural Language Processing, Statistics, and Electronic Health Records: A Feasibility Study. Journal of the American Medical Informatics Association. 2009;16(3):328-337. doi:10.1197/jamia.M3028

186. Zheng L, Wang Y, Hao S, et al. Web-based Real-Time Case Finding for the Population Health Management of Patients With Diabetes Mellitus: A Prospective Validation of the Natural Language Processing-Based Algorithm With Statewide Electronic Medical Records. JMIR Medical Informatics. 2016;4(4):38-50. doi:10.2196/medinform.6328

187. Murff H, FitzHenry F, Matheny M, et al. Automated Identification of Postoperative Complications Within an Electronic Medical Record Using Natural Language Processing. JAMA. 2011;306(8):848-855. doi:10.1001/jama.2011.1204

188. Thompson J, Hu J, Mudaranthakam D, et al. Relevant Word Order Vectorization for Improved Natural Language Processing in Electronic Health Records. Scientific Reports. 2019;9. doi:10.1038/s41598-019-45705-y

189. Rybinski M, Dai X, Singh S, Karimi S, Nguyen A. Extracting Family History Information From Electronic Health Records: Natural Language Processing Analysis. JMIR Medical Informatics. 2021;9(4). doi:10.2196/24020

190. Kormilitzin A, Vaci N, Liu Q, Nevado-Holgado A. Med7: A transferable clinical natural language processing model for electronic health records. Artificial Intelligence in Medicine. 2021;118. doi:10.1016/j.artmed.2021.102086

191. Zhao Y, Fu S, Bielinski S, et al. Natural Language Processing and Machine Learning for Identifying Incident Stroke From Electronic Health Records: Algorithm Development and Validation. Journal of Medical Internet Research. 2021;23(3). doi:10.2196/22951

192. Escudie JB, Jannot AS, Zapletal E, et al. Reviewing 741 patients records in two hours with FASTVISU. AMIA Annual Symposium Proceedings. 2015;2015:553-559.

193. Osborne JD, Wyatt M, Westfall AO, Willig J, Bethard S, Gordon G. Efficient identification of nationally mandated reportable cancer cases using natural language processing and machine learning. Journal of the American Medical Informatics Association. 2016;23(6):1077-1084. doi:10.1093/jamia/ocw006

194. Forsyth AW, Barzilay R, Hughes KS, et al. Machine Learning Methods to Extract Documentation of Breast Cancer Symptoms From Electronic Health Records. Journal of Pain and Symptom Management. 2018;55(6):1492-1499. doi:10.1016/j.jpainsymman.2018.02.016

195. Penrod NM, Lynch S, Thomas S, Seshadri N, Moore JH. Prevalence and characterization of yoga mentions in the electronic health record. Journal of the American Board of Family Medicine. 2019;32(6):790-800. doi:10.3122/jabfm.2019.06.190115

196. Sagheb E, Ramazanian T, Tafti AP, et al. Use of Natural Language Processing Algorithms to Identify Common Data Elements in Operative Notes for Knee Arthroplasty. Journal of Arthroplasty. 2021;36(3):922-926. doi:10.1016/j.arth.2020.09.029

197. Zhu Y, Sha Y, Wu H, Li M, Hoffman RA, Wang MD. Proposing Causal Sequence of Death by Neural Machine Translation in Public Health Informatics. IEEE Journal of Biomedical and Health Informatics. 2022;26(4):1422-1431. doi:10.1109/JBHI.2022.3163013

198. Chen J, Druhl E, Polepalli Ramesh B, et al. A Natural Language Processing System That Links Medical Terms in Electronic Health Record Notes to Lay Definitions: System Development Using Physician Reviews. Journal of Medical Internet Research. 2018;20(1):e26. doi:10.2196/jmir.8669

199. Cruz N, Canales L, Munoz J, Perez B, Arnott I. Improving Adherence to Clinical Pathways Through Natural Language Processing on Electronic Medical Records. Studies in Health Technology and Informatics. 2019;264:561-565. doi:10.3233/SHTI190285

200. Schmeelk S, Dogo MS, Peng Y, Patra BG. Classifying Cyber-Risky Clinical Notes by Employing Natural Language Processing. Proceedings of the Annual Hawaii International Conference on System Sciences. 2022;2022:4140-4146. doi:10.24251/hicss.2022.505

201. Schaye V, Guzman B, Burk-Rafel J, et al. Development and Validation of a Machine Learning Model for Automated Assessment of Resident Clinical Reasoning Documentation. Journal of General Internal Medicine. 2022;37(9):2230-2238. doi:10.1007/s11606-022-07526-0

202. Uyeda A, Curtis J, Engelberg R, et al. Mixed-methods evaluation of three natural language processing modeling approaches for measuring documented goals-of-care discussions in the electronic health record. Journal of Pain and Symptom Management. 2022;63(6):E713-E723. doi:10.1016/j.jpainsymman.2022.02.006

203. Schwartz J, Tseng E, Maruthur N, Rouhizadeh M. Identification of Prediabetes Discussions in Unstructured Clinical Documentation: Validation of a Natural Language Processing Algorithm. JMIR Medical Informatics. 2022;10(2). doi:10.2196/29803

204. Hazlehurst B, Sittig D, Stevens V, et al. Natural language processing in the electronic medical record: Assessing clinician adherence to tobacco treatment guidelines. American Journal of Preventive Medicine. 2005;29(5):434-439. doi:10.1016/j.amepre.2005.08.007

205. Lee R, Brumback L, Lober W, et al. Identifying Goals of Care Conversations in the Electronic Health Record Using Natural Language Processing and Machine Learning. Journal of Pain and Symptom Management. 2021;61(1):136. doi:10.1016/j.jpainsymman.2020.08.024

206. Gong JJ, Soleimani H, Murray SG, Adler-Milstein J. Characterizing styles of clinical note production and relationship to clinical work hours among first-year residents. Journal of the American Medical Informatics Association. 2021;29(1):120-127. doi:10.1093/jamia/ocab253

Tables

Figure 1: PRISMA diagram of the study selection process

Figure 2: Distribution of studies by time and domain

Figure 3: Distribution of studies by domain

Author Biographies

Scott W. Perkins, BA, is a medical student at Cleveland Clinic Lerner College of Medicine of Case Western Reserve University.

Justin C. Muste, MD, is an ophthalmology resident at Cleveland Clinic Cole Eye Institute.

Taseen Alam, BS, is a medical student at Case Western Reserve University School of Medicine.

Rishi P. Singh, MD, is Vice President and Chief Medical Officer of Martin North and South Hospitals, and Professor of Ophthalmology at Cleveland Clinic Lerner College of Medicine.